SPT v12n1 - The Coding of Technical Images of Nanospace: Analogy, Disanalogy, and the Asymmetry of Worlds

The Coding of Technical Images of Nanospace: Analogy, Disanalogy, and the Asymmetry of Worlds

Thomas W. Staley

Virginia Tech

Abstract

This paper argues that intrinsically metaphorical leaps are required to interpret and utilize information acquired at the atomic scale. Accordingly, what we 'see' with our instruments in nanospace is both fundamentally like, and fundamentally unlike, nanospace itself; it involves both direct translation and also what Goodman termed "calculated category mistakes." Similarly, and again necessarily, what we 'do' in nanospace can be treated as only metaphorically akin to what we do in our comfortable mesoworld. These conclusions indicate that future developments in nanotechnology will rely, in part, on the creation of more sophisticated metaphorical codes linking our world to nanospace, and I propose some initial possibilities along these lines.

Keywords: Nanotechnology, Imaging, Phenomenology, Metaphor, Scale

Introduction

In this paper, I want to explore some features of images used in contemporary nanotechnology in order to establish a strategy for enhancing visualization practices toward particular achievable goals. I propose this approach in response to a particular species of 'nanohype' evident (or at least implicit) in such images. I am less interested in what we might call 'delusions of grandeur' or 'flights of fancy' in the domain of nanotechnology than in the pragmatics of scientific visualization strategies at this scale. The many, effectively fictional, depictions of nanotechnological prospects that have caught the recent attention of the public (such as miraculous blood-cleaning nanorobots and panacea-like medicines tailored to individual genetics or, on the negative side, the dangers of rampant 'grey goo') are not my concern. Instead, I focus on a different sort of hype being generated in expert circles. These claims, associated with new scientific imaging traditions, encourage us to believe that recent instrumental advances constitute a truly deep shift in our understanding of, and access to, the atomic scale.

Much of the recent interest in such images has involved this sort of partisan enthusiasm, with vivid depictions suggesting possibilities for atomic-level engineering far beyond current practice. For example, the circulation of synthetic instrumental images showing arrangements of a few dozen atoms on a carefully prepared surface easily leads us to the false impression that engineers can now control atoms with the same ease that a child controls wooden blocks. Simultaneously, the supplementation of instrumental data with sophisticated routines for image manipulation has become standard practice in the field. Access to computer image enhancement, made possible by advances in the speed and volume of information processing, lends investigators great flexibility in image presentation. The bulk of this paper will inquire into the implications of these developments for contemporary nanotechnology, presenting a case for realigning imaging practices with basic objectives that can serve both scientific and human ends.

Explicit claims that we have developed sophisticated technological inroads into the nanometer scale regime seem to depend on either (a) an instrumental interface that is information-rich or (b) an instrumental interface that is phenomenally rich. The former sort of claim is often formulated in terms of our new ability to really 'see, understand and control' the atomic regime. In the latter, the claim is rather about our now being able to adopt a subjective perspective equivalent to 'being at the nanoscale.' The two are not mutually exclusive, but they do represent independent instincts that I will analyze more fully below. Each is reflected in recent discussions of trends in nanoimaging in the scholarly literature. In response to these analyses, I contend that such practices have in fact done relatively little, given their potential, to enhance our understanding of atomic-scale phenomena. By comparing these sorts of images against a set of specific technical ideals that they can reasonably aspire to achieve, I hope instead to promote modesty about current imaging trends and to indicate some avenues for improving them with respect to the informational and phenomenal desiderata just described. In pursuit of this goal, I will examine a different conception of image making geared toward the production of richer models of the atomic regime. I hope thereby to replace nanohype with a degree of 'nanohope': the pragmatic possibility of bringing the atomic and human scales into better contact.

This project will involve the consideration of images at a variety of interrelated levels: not only individual physical ('actual') images [Image 1], but also individual mental images [Image 2] and mental image sets or frameworks [Image 3]. These last serve as a means for our interpretation of both kinds of individual image, and can also usefully be regarded as mental 'worlds' (or world analogs) insofar as they offer putatively comprehensive models of a physical domain (or actual 'world'). For example, I will use 'nanoworld' as a group term to indicate the phenomena of the nanometer scale taken as an ensemble. Our mental image of this nanoworld is not a single item but a developmental framework, akin to that constituting our 'normal' human world. These various dimensions of 'imaging' will emerge more clearly in the course of my discussion, but I will begin by posing a question about the relationships among these levels.

What can and do we expect to glean from interactions with this nanoworld, given that imaging technologies are an inherent part of the process? As I will develop below, the use of images as media between human experience and phenomena is always a hybrid process involving different possible modes of interpretation. My question is thus at once semiotic, epistemological, and ontological in character. That is, considering together the three senses of image just discussed, explaining our mediated interactions with the nanoworld involves sign systems, knowledge processes, and structures of being. I will link these aspects of the problem by examining a variety of perspectives (cognitive psychological, aesthetic, pragmatist, and phenomenological) on images offered in the philosophical literature. Using this framework, I will argue that imaging codes can be made more sophisticated by mutually accommodating these various possible modes of interpretation more fully and more densely. In a 'scientific' mode, this equates to the transmission of information at maximal efficiency. From another, more common-sensical perspective, however, the goal is better construed as achieving fuller phenomenal contact between the subject and object of inquiry. To obtain these goals together most effectively, I propose that we can utilize a systematic imaging strategy that I will describe through the notion of a 'technical image' combining a scientific modality (in principle, readable by machine) and a manifest modality (in principle, always referred back to an existential agent).

I further suggest that closer attention to the encoding of images reveals, in the particular case of atomic scale phenomena, important and inextricable disanalogies with normal experience. This, I will argue, is a direct result of the metaphorical character of nanoscale images; images capable of conveying a rich functional representation of the atomic regime must do so by significantly reorienting our phenomenal expectations.

Metaphorical leaps are required to use and interpret atomic scale information because of fundamental disanalogies between the relevant ambient phenomena of that scale and those of our own. That is, the processes and structures that we wish to monitor and manipulate in the nanometer regime differ constitutively from those that we are familiar with through our various naked senses. Of course, differences of this sort are not unique to the atomic scale; certain reorientations of sensory expectation must occur with many phenomena that we access through technological instruments. Users of binocular optical microscopes, for example, must not only learn to physically refocus their eyes but must also deal with an unfamiliar combination of reflected and transmitted light in viewing samples. Thus, while some elements of vision enhanced by such a microscope remain analogous to our 'normal' experience (relative sizes and temporal simultaneity of the entire visual field, for example), others can differ substantially (the significance of color within the same object, and even apparent shape). Users of such instruments, as part of the process of developing expertise, must learn to accommodate these new phenomenal regularities in order either to understand what they are seeing or to work with the objects under observation. Depending on the instrumental situation, new tactile habits or other sensory-motor readjustments may be required. Such human-technological experiences create, in effect, circumscribed new worlds as supplements to our everyday one. As my development below will argue in detail, this process is a complex one involving multiple experiential modalities that mutually adjust to one another. In particular, accommodating a new instrumental interface into our experiential palette reorients both our formal theoretical expectations and our common-sensical ones at the same time.

These are obviously relative accommodations. Rarely are all of the components of our experience put into flux at once by a new instrumental interface with the world. But this is part of my point: What makes the nanometer scale a special case is precisely the degree to which the forces, structures, processes, and events of interest defy the expectations of the human-scale. This is not an absolute or unique barrier but it is an especially conspicuous issue for the case of nanotechnology. To see why this is the case, let us now turn to some specific analytical approaches to imaging.

The Technical Image

The central notion I will use in my exploration is that of the technical image. By a technical image, I mean an actual image that is systematically encoded. Thus, the technical image appears as an ideal type: an image, in my framework, is more or less technical depending on the degree to which its features are determined by an established semiotic code rather than by singular discretionary choices. At the opposite extreme from the technical image would be an entirely non-technical image whose features are the result only of individual decisions introduced by the image-maker. With a different flavor to the terminology, we might similarly describe the technical/non-technical axis as one dividing the domesticated or disciplined from the wild or feral. The distinction in question, as the examples below are intended to indicate, is one between a domain of features exhibiting predictability and commonality on the one hand and one of discretionary, unbounded characteristics on the other. The systematic encodings of the technical domain lend themselves to the formation of collective imaging traditions in two senses: (1) that of grouping images themselves collectively, as in atlas-like compendia or comprehensive image environments, and (2) that of organizing intersubjective knowledge communities around the image system.

This perspective is amplified by reference to Daston & Galison's recent treatment of modern traditions of imaging and objectivity (2007). In this work, they detail how a tradition of image formation by means of machines emerged in the nineteenth-century formal sciences, and they document the extent to which such instrumentally generated images still depend on superadded codes for their interpretation. Despite the pursuit of 'objectivity' that this historical tradition endorsed, the products of the enterprise instead epitomize a particular strategy of instrument-centered 'intersubjectivity' accomplished through complex technical codings. This observation shifts our attention from absolute distinctions between scientific and other images to the details of these coding practices themselves.

Since all images contain codes of one sort or another, a few familiar examples may help to indicate some initial distinctions in this regard. Take, for example, a black and white photograph. At a basic level, the photographic image encodes the position of spatial objects by recording optical contrasts. The patterning of black and white components actually recorded on film is systematically related to properties of the objects in the image field, and it is a replicable feature of the device. Thus, it represents a 'technical' encoding in my sense, at least at an idealized level of analysis. But we encounter complications in separating the technical aspects from other ones. First, the systematic relationship itself is a complex and usually unknown one involving many factors: properties of the film, exposure, lenses, and other camera parameters, as well as external light conditions. Furthermore, the pattern encoded on film is rarely the image of direct interest in a photograph. Rather, we encounter the developed and printed translation of the camera's recording, introducing yet another level of variables, some perhaps systematic in character but others irreplicable and uncontrolled. Nonetheless, the interpretive frameworks we employ for photographic images obviously provide a sufficient level of intersubjectivity to allow for widely shared translations in many circumstances. To the extent that such images, in conjunction with our frameworks for their use, can transmit unambiguous information content, their technical character remains a practical, and not merely an ideal, matter.

While machine-based strategies like photography are a common approach to introducing systematic encoding, my notion of a technical image also embraces features independent of instrumental recording media. Classical traditions of painting, for instance, also exhibit many systematic features. For one, as Patrick Heelan's classic analysis (1983) details, the use of linear perspective introduces a technical encoding of spatial relationships far different from the one the human eye itself perceives. Correspondingly, our ability to adapt to and intuitively interpret such features is an indication of a flexible capacity for systematic mental imaging in ourselves. Technical interpretations, though, remain a special case of our readings of images: in some symbolist traditions, color takes on a technical significance in paintings in addition to its naturalistic or common-sensical one. Yet systematic encoding of meaning through color is effectively independent of the function of color in the visual depiction itself. That is, we can recognize a person depicted in such a painting without registering the technical role that the particular colors selected for that depiction might be meant to play. In effect, the technical interpretations exist as an elective subset of the general interpretations, a notion I will return to in later sections.

We observe also, from these examples, that systematic codings may be either open or closed in their parameters. In the sense I am using the term, closed translational systems are those employing a code that comprehensively maps a particular phenomenal space or dimension into an image. One example of such a system is the 'color wheel' familiar in contemporary computer software, which allows selection of a given tint for a virtual object. This wheel maps all available colors into a closed system of choices that, importantly, is also structured into a set of comprehensive relationships: a circular space of related individual options (or more precisely, given the independent axis of black-white options, a cylindrical or elliptical one). It is a good formal model for the notion of the technical image I am proposing, insofar as it exhibits not only systematic and finite encoding of a continuum but also a phenomenal relational structure that delimits and shapes what the system as a whole can be used to represent (at most three-dimensional phenomena, with special relationships of similarity and difference dictated by the color structure, etc.). However, such systems are not the only ones that exhibit what I am calling 'technical' character. 'Open' translational systems need exhibit no such comprehensive coverage of a dimensional space, nor any such special relationships among individual components, yet they can still qualify as technical in my sense if they exhibit translational systematicity. For example, the iconography of maps or road signs is encoded in a systematic way (one-to-one correspondence between an icon and its referent-type). Yet it need not cover a continuous phenomenal space of possibilities, nor need there be any particular relationship among icons (beyond such trivial qualities as being perceptually distinguishable). While there often are certain discretionary relationships in iconic codes, such as the use of related symbols for settlements of different sizes on a map, these are matters of associative convenience rather than functional necessity. That is, they are choices rather than the outcome of systematic constraints included in the form of the code itself.
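The contrast between closed and open codes can be made concrete with a small sketch. The Python below is an illustrative assumption rather than anything proposed in the paper: it uses the standard-library `colorsys` HSV model as a stand-in for the software 'color wheel' (a hue circle plus saturation and value axes, that is, a cylinder), and a hypothetical icon table, `OPEN_CODE`, for an open, legend-style code. The closed code accepts any point of a continuum and carries its circular structure in its form (hue 0 and hue 1 name the same color); the open code is only a finite one-to-one lookup whose entries bear no relation to one another.

```python
import colorsys


# Closed code (illustrative): any point of the HSV cylinder, each axis in
# [0, 1], maps to exactly one 8-bit RGB display color. Hue wraps around,
# so a circular structure is built into the form of the code itself.
def closed_code(hue: float, saturation: float, value: float) -> tuple[int, int, int]:
    r, g, b = colorsys.hsv_to_rgb(hue % 1.0, saturation, value)
    return (round(r * 255), round(g * 255), round(b * 255))


# Open code (hypothetical legend): a one-to-one mapping from icon to referent
# type. The icons are related only by being distinguishable from each other.
OPEN_CODE = {
    "circle": "town",
    "square": "city",
    "plane": "airport",
    "anchor": "harbor",
}


def decode_icon(icon: str) -> str:
    # An unlisted icon raises KeyError: the code covers only what it enumerates.
    return OPEN_CODE[icon]


if __name__ == "__main__":
    print(closed_code(0.0, 1.0, 1.0))  # pure red
    print(closed_code(1.0, 1.0, 1.0))  # the same red: the hue axis is circular
    print(closed_code(0.5, 1.0, 1.0))  # cyan, opposite red on the wheel
    print(decode_icon("square"))
```

Nothing in `OPEN_CODE` would change if "circle" and "square" swapped referents, whereas reordering the hue axis would break the closed code's similarity relations; that asymmetry is the distinction drawn above.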

These coding possibilities are evident in some familiar examples from cartography. The depicted shapes and locations of geographic features are technical features of a typical map, as they are constrained by the characteristics of the chosen projection. Other mapmaking practices, such as the coloring of adjacent nations in contrasting tones, are non-technical, as they are effectively subjective or arbitrary in character. But we should not confuse non-technical with non-practical, nor subjective with illogical, here; there are plenty of practical and logical reasons for distinguishing nations by color on a map and for using particular color combinations to do so. The important distinction here is whether the elements of the code stand in constrained relation to one another as a condition of the code's operation as a whole (technical) or whether these relationships are 'picked' without impacting the code overall (non-technical).

Many cartographic encodings blend these approaches, as with the representation of the population and political status of cities by a set of icons that fuse a systematic representation of size with discretionary markers for capitals or other special features. In practice, most images, even in natural scientific practice, combine features of both extremes as well. However, I will argue here for greater attention to technical images as a particular domain where our imaging traditions could be enriched. Even if purely technical character is only an ideal when applied to actual images, technical structure can still be achieved in images as a matter of degree. Furthermore, I will argue that this is a beneficial approach. Thus, my intention here is admittedly normative and prescriptive, and I will value one set of imaging practices relative to other interacting ones on the basis of a number of specific criteria.

To clarify these commitments, it will be helpful to consider more fully some of the different sorts of images that would qualify as technical by this definition, as the class I am trying to describe covers a number of superficially disparate domains. The three salient kinds of technical image I will address here are: (1) instrumental images: phenomenal traces (visual or otherwise) that encode and record one or more properties of an object by means of an intervening machine; (2) model or map images: representations of salient characteristics of an object or objects; and (3) virtual reality [VR] or simulation images: phenomenally immersive presentations of the subject within an environment of objects. I do not regard these as clearly demarcated or mutually exclusive domains of images, but a separate consideration of these three types will reveal different implications of the perspective I am proposing.

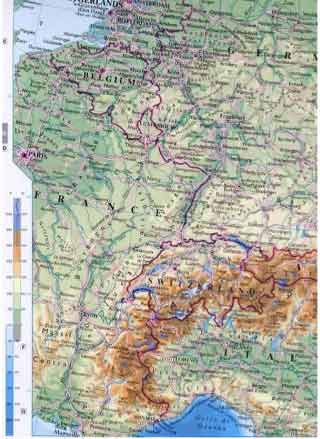

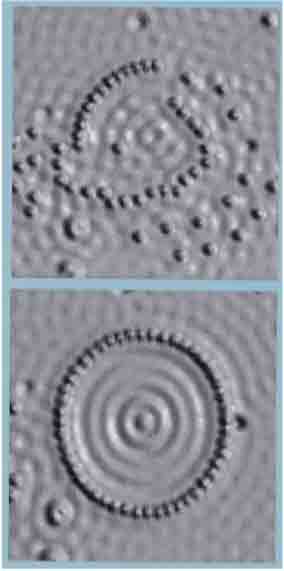

The primary distinctions I wish to draw are two: one dividing the typical instrumental image from the typical map or VR image, and one dividing the VR image from the other two. In the former case, there is usually a distinction in terms of richness of information content, with most instruments operating along what we might call a single phenomenal 'channel' and most maps and VR simulations being 'multichannel' in character. That is, in a typical instrument for probing the nanometer scale, such as a scanning tunneling microscope, we are presented with a visual image that, no matter how synthetic it is in formulation, is conveyed to the user via a single unitary phenomenal coding scheme. An example of this is seen in the black-and-white contrast images in Figure 3 below: all of their information content is contained in this B/W coding 'channel'. In a typical map image (such as the political-geographic type in Figure 1, discussed below), a multiplicity of overlapping codes is employed: one for land elevation, another for water depth, others for population centers, regions, et cetera. By utilizing multiple coding 'channels', the map image (and, similarly, the virtual reality image) is capable of conveying more about its subject than would be possible with a single visual code. The richness of information content thus encapsulated can be quantified by reference to the complexity of the symbol system required to encode the image.
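One way to make 'complexity of the symbol system' concrete, offered here only as an assumed formalization and not as the measure the paper itself uses, is Hartley's measure: a channel with n perceptually distinguishable states carries log2(n) bits per image location, and channels that vary independently add. All channel names and level counts below are hypothetical, and because real map channels are not fully independent the total should be read as an upper bound. The sketch only shows why layering codes raises capacity relative to a single grey-scale channel.

```python
import math


def channel_bits(levels: int) -> float:
    """Hartley measure: bits needed to select one of `levels` distinguishable states."""
    if levels < 1:
        raise ValueError("a channel needs at least one state")
    return math.log2(levels)


def code_bits(channels: dict[str, int]) -> float:
    """Bits per image location, assuming the channels vary independently."""
    return sum(channel_bits(levels) for levels in channels.values())


# Hypothetical single-channel instrument image: one grey-scale code with,
# say, 16 grey levels that a viewer can reliably tell apart.
stm_image = {"grey level": 16}

# Hypothetical multichannel map: several overlapping codes at each location.
relief_map = {
    "surface tone": 17,    # 9 land elevation tones + 8 water depth tones
    "settlement icon": 4,  # none, or one of three size classes
    "boundary line": 2,    # present or absent
}

if __name__ == "__main__":
    print(f"single channel: {code_bits(stm_image):.2f} bits per location")
    print(f"multichannel:   {code_bits(relief_map):.2f} bits per location")
```

Adding a channel multiplies the number of distinguishable combinations, which is why the logarithmic measure grows additively as codes are layered.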

A different axis divides the VR simulation from the instrumental trace or map: a dimension of richness of phenomenological access. That is, the VR simulation attempts to place the observer into a constitutively interactive sensory milieu where the boundary between the image and the observer's environment disappears. While producing such a perceptual fusion may often entail an information-dense simulation medium (as described in the previous paragraph), the goal of perceptual acquaintance here is not congruent with achieving information density. What is sought is instead a convincing alternative experience. As one example of a phenomenal 'virtual reality' not reliant on information-dense processes of re-coding, consider the zero-gravity simulators used by the U.S. space program to train astronauts. This alternative reality is created by means of an aircraft flight path in which passengers temporarily experience weightlessness in controlled free-fall. This process provides a compelling and immersive phenomenal experience, but not one especially information-dense in nature. Of course, in formulating a virtual reality simulation of nanospace, we might think more in terms of an alternative visual experience, but here too the goal remains orthogonal to that of information density: however much information might be packed into a virtual 'nanoenvironment', the primary project remains the creation of a qualitative apprehension of 'being there.' It is this quest for phenomenal richness that is at the heart of virtual reality projects. In more usual images, the image appears as part of the observer's environment, and the sensory capacities of the observer are not experientially fused with the imaging device. As such, the images produced by instruments or constituting maps differ fundamentally in their intended purpose from those proffering a virtual reality experience.

The definition I have proposed for the technical image, and the practical traction that I think the notion provides, emerge from a conception of these two kinds of richness (of information content and of phenomenological access) as desiderata. In the domain of technical images, I contend, we have a strategy for enhancing both of these dimensions of our experience of phenomena. Nonetheless, I do not wish to characterize technical images as good images and others as bad or inferior. Nor do I want to equate the technical image solely with instrumental images used in contemporary natural science. Further, the technical image is not necessarily equivalent to the informationally rich image or the phenomenally rich image. However, I do want to maintain that images that are technical in my sense are capable of achieving particular pragmatic ends, including levels of phenomenological access to unobservable phenomena and levels of information density that other images do not provide. Technical images obtain these advantages by way of a coding scheme that accommodates systematic disanalogies and analogies within the image itself, translating into our own experience an information-rich depiction of nano-phenomena that is simultaneously compelling within a human perceptual framework.

Insofar as they are images, they exhibit general semiotic characteristics that I contend are best interpreted as a type of visual metaphor. Simply put, I suggest we consider images (in the context of this paper) as symbols for phenomena, and therefore potentially similar to or different from those phenomena across a partial range of qualities. I will thus begin my discussion by examining the notion of visual metaphor itself and the advantages provided by considering images, and nanoscale images in particular, in this light. Insofar as they are technical elements of images, in my terminology, these semiotic characteristics also take on a particular form. This form restricts the technical image to certain representational strategies, but it also provides the image with uniquely useful capacities.

To demonstrate this latter point, I will draw parallels between my conception of the technical image and some related epistemological and ontological distinctions stemming from the work of Wilfrid Sellars and Maurice Merleau-Ponty. With this conceptual apparatus in hand, I then turn my attention to some different basic types of images pertinent to contemporary nanoscale research. I use these to emphasize the positive potential of technical images to enhance, in two ways, our access to the regime of the very small. I then show how a focus on these capacities of the technical image meshes with two other analyses of nano-imaging by Don Ihde and Felice Frankel.

Visual Metaphor

I have said that my notion of a technical image relies upon the degree of systematic encoding involved in the production of the image. What precise role, then, does a systematic encoding play in producing a particular relationship between the phenomena being imaged and the observer? My explication will proceed in two parts, the first positioning image-making as a species of visual metaphor and the second (in the next section) examining images as potentially productive of new experiential worlds.

Discussions of visual metaphor have been most prominent in two domains of inquiry: art history and aesthetics (as in Goodman (1976), St. Clair (2000), and Carroll (1994)) and cognitive psychology (e.g., Lakoff & Johnson (1980, 1999) and Seitz (1998)). However, I maintain that this is also a useful framework within which to consider imaging technologies. The above-cited review by Seitz examines different models of metaphor and concludes that the best approach to the semiotic role of metaphor is a cognitive, or symbol systems, perspective. Seitz suggests that such a view is compatible with the work of both Goodman and Lakoff & Johnson, but uses Goodman's Languages of Art: An approach to a theory of symbols (1976) as a primary reference point. This model understands metaphor as not exclusively or especially verbal, but rather as a general process of "the transfer of properties across symbol systems" (Seitz, 1998). By means of such encoding processes, we establish an economy of analogies and disanalogies (what Goodman calls a set of "calculated category mistakes") among different symbolic regimes.

For example, both the mesoworld and the nanoworld are known to us through mental frameworks or models (Image 3). Interactions between ourselves and those worlds are enabled by these mental frameworks, which are themselves constituted by symbol systems. But the nanoworld exists for us only through instruments, and principally through instrumental images (Image 1). In Seitz' terminology, these images are the medium for 'transfer of properties' between the nanoworld and the mesoworld. From Lakoff & Johnson's similar perspective, the nanoworld would instead be regarded as the 'target domain' that we attempt to understand through metaphor. The 'source domain' from which we draw our metaphors is the mesoworld. But since the actual images that serve as our medium are themselves phenomenal mesoworld entities, they intrinsically limit the potential for creation of productive analogies with the nanoworld. When coding images systematically by way of a scientific algorithm, we are also necessarily coding them within a particular perceptual framework for interpretation. Thus, in suggesting that we should regard the technical image from this perspective as one that is systematically encoded, I am marking out a special status for those images in which the use of visual metaphor is effectively predetermined by a schema of constraints on content. These constraints are productive ones in the sense that they provide an avenue for the creation of metaphorical bonds not just between individual images (Image 1 or Image 2 in my earlier development) but between entire contextual image frameworks (Image 3).

In the next section I will supplement this perspective with some additional notions about mental images and frameworks for their interpretation, but some initial observations that point toward these developments are possible now. The metaphorical links described above organize our experience into what might be called either 'experiential gestalts' or 'lifeworlds'. As already suggested by my use of 'nanoworld' and 'mesoworld,' these frameworks are ultimately local in their coverage, and many can exist in our minds without need for coherence. It is this multiplicity of contexts that demands the creation of symbol systems for translation. But, returning to Goodman's descriptive phrase, only in some such worlds can the 'calculated' disanalogies between symbolic 'categories' properly be considered 'mistakes.' Some interpretive frameworks, including those we use to systematically encode images, depend constitutively on cross-wiring of categories in this way, and within such a domain these decisions are anything but mistaken. However, in frameworks of a different kind (those directly dependent on phenomenal expectations, for example) we do run the risk of mistaking source and target domains in the sense Goodman indicates. I will reconsider this distinction in my discussion of specific nano-imaging examples below.

Returning for now to the familiar regime of mapmaking, we observe that the content of a typical relief map in an atlas is effectively predetermined by means of the color, icon, and other codes described in the legend. Each of these symbols is functional within a larger system, with color typically coding both for elevation and for correlated phenomena such as terrain or ecology (blue for water, green for low-lying grassland, brown for hills, gray for mountains, etc.). These exemplify the salient characteristics of the technical image, which ideally would contain only rigidly encoded information, with the basis for the coding (which we might also call Image 3) determined prior to formation of the particular image (Image 1). The technical features of the coding are also, importantly, adjusted to characteristics of the human perceptual system. For example, while the particular choices of tones used for elevation and depth can be informally correlated to environmental features (as just explained), they also, as a set, lie in a perceptual continuum. The land elevation colors, while muted, are arranged in a spectral order: not the canonical seven-fold 'ROYGBIV' spectrum running from Red to Violet, but one approximating an 'IVROYG' ordering from Indigo to Green in nine tonal increments. The remaining blue tones used for water are ordered by a black-white value component. Thus, the arrangements take advantage of a shared, pre-formed perceptual coding of colors that allows for easy interpretation by analogy.
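The ordinal logic of such a legend can be sketched as a lookup from value bands to an ordered list of tones. In the Python below, the eight break points (in meters) and the nine tone names are hypothetical placeholders, since the atlas's actual legend values are not given here; the tone names simply follow the 'IVROYG' ordering read from the low (green) end. What the sketch illustrates is the structural constraint described above: because the tones are listed in perceptual order and assigned by band, a higher elevation can never receive a tone that precedes a lower elevation's tone.

```python
from bisect import bisect_right

# Hypothetical legend: eight break points (meters above sea level) give nine bands.
ELEVATION_BREAKS_M = [100, 200, 300, 500, 1000, 1500, 2000, 3000]

# Hypothetical tone names, ordered from low ground (green) to high ground
# (indigo), i.e. the 'IVROYG' sequence read in reverse.
TONES = [
    "green", "yellow-green", "yellow", "orange", "red-orange",
    "red", "violet", "blue-violet", "indigo",
]


def elevation_tone(elevation_m: float) -> str:
    """Return the legend tone for a land elevation; a break value starts the next band."""
    return TONES[bisect_right(ELEVATION_BREAKS_M, elevation_m)]


if __name__ == "__main__":
    for height in (50, 250, 1200, 4000):
        print(height, elevation_tone(height))
```

Using a sorted list of break points with `bisect_right` keeps the mapping monotone by construction: the code, not the individual image, fixes which tone a given height receives.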

Figure 1: A typical cartographic representation of a region of central Europe, excerpted from the Oxford Atlas of the World, eleventh edition (2003).

Taken as a whole, this is an image that is both systematically like and systematically unlike the things it portrays. Furthermore, its coding scheme−shared and explicit throughout the atlas−provides a fairly comprehensive template for acquainting ourselves with and interpreting the entire set of images therein. It employs this ordering of already familiar perceptual categories (Lakoff and Johnson's 'source domain') to refer to another finite set of phenomena of interest (the 'target domain'). In a technical context, the visual metaphors introduced between source and target provide an avenue for effective translation. In this respect, it is the translational system itself−what I called above an 'economy of analogies and disanalogies'−that is of primary interest, directing our attention to matters like the arrangement of scalar continua and icon keys. Yet the image also participates, importantly, in another interpretive context at the same time, one that demands that the map be 'like Europe' for us in a fundamentally different way. It is in this context that particular code choices, such as mimicking grassland and mountain colors, are generated: the decisions that render the map a compelling portrayal of its subject and lend it a degree of similarity to an aerial photograph of the same region. To distinguish these domains more clearly and relate them back to the two technical imaging desiderata I introduced in the previous section, I will now turn to some related concepts about images already available in the philosophy of science and technology.

The Technical Image & Philosophical Image Worlds

My development here will rely on a synthesis of pragmatic and phenomenological perspectives on images. I will begin by suggesting a way to understand my perspective on technical images within the 'stereoscopic' synthesis of manifest and scientific viewpoints proposed by Wilfrid Sellars. It will emerge that a central difference between my perspective and Sellars' is that between a discussion of literal images utilized in a social milieu and one of mental images employed in the individual mind. Nonetheless, I believe that Sellars' position amplifies my own by clarifying the relationship between systematically constrained semiotic codes and the discretionary ones of day-to-day existence. To further develop this relationship, I will move from Sellars' system of image spaces to an interpretation of Merleau-Ponty explicated by Dreyfus and Todes (1962). This will shift the discussion into the domain of embodied phenomenological 'worlds', where I will suggest some consequences of my notion of a technical image for the extension of our lived experience into the domain of unobservables. This argument relies on the already established conception of images as a form of (primarily) visual metaphor, wherein levels of systematic analogy and disanalogy with our typical experience serve to delimit our phenomenological access to certain phenomena.

The distinction I propose between technical and non-technical images is first and foremost about actual physical images (Image 1). Nonetheless, it is importantly congruent with a mental image distinction drawn by Wilfrid Sellars in his seminal paper "Philosophy and the Scientific Image of Man" (1962). Therein, Sellars argues for an epistemology that preserves in "stereoscopic" perspective two ways of seeing the world. One, the manifest image, represents the 'common-sense' picture of the world we develop through the mediation of symbol systems in general. The other, scientific, image is an outgrowth of the manifest view that whittles down the contents of the world to a set of physical structures and properties that are systematically related to one another in a theoretical frame. A proper philosophical understanding of what our knowledge consists of must, Sellars contends, retain both perspectives in order to do justice to our experience. It is in the same spirit that I suggest we distinguish technical images−images that will, in Hacking's famous terms, allow us to "represent" and "intervene" in a technoscientific manner−from non-technical ones that provide us only a commonsensical and ad hoc depiction of phenomena. My intention is not to eliminate one sort of image-making tradition in favor of the other, but to identify the special characteristics of one with respect to the other and thereby to highlight the complementary nature of the two; in short, my aim is to consider the technical image, and the prospects for its enhancement, in 'stereoscopic' terms. These stakes will be more obvious when we introduce an explicit phenomenological element to the discussion. This will allow us more easily to make the leap from Sellars' mental images to the role of actual technical images in creating a mediated experience of theoretical phenomena.

While Sellars' immediate concern is to relate the formulation of scientific theories to our mental processes at large, Maurice Merleau-Ponty's approach to images begins from a different standpoint. The latter's Phenomenology of Perception (1945/1962) addresses the problem of how technological artifacts such as images assist us in broadening our existential milieu. In this pursuit, he insists that mental states of the kind Sellars discusses must be regarded as embodied phenomena instantiated through physical entities. Merleau-Ponty thus emphasizes, in a way Sellars does not, an explicit role for actual images (my Image 1) in his discussion of the human-world interface. Since for him both our own existential situation and the phenomena of the world are co-products of a relational process of development, actual image media and their phenomenal qualities are of crucial interest in establishing our orientation to the world.

Merleau-Ponty's attention to embodiment helps to link Sellars' model back to our earlier discussion of visual metaphor and its limitations. In particular, Sellars' exclusive focus on an idealized mental image domain does not, by itself, indicate clearly the systematic constraints that are imposed on coding systems by our existential status in the world. While Sellars and Merleau-Ponty are equally adamant that we regard our knowledge processes as mediated through acquired image frameworks (Image 3), the phenomenological perspective on imaging technologies presented by Merleau-Ponty strongly attaches constraints of physical constitution to the problem of mental framework development. This move could be interpreted as one from issues of knowledge systems to those of an emergent 'natural' ontology, but the broader view proposed by Merleau-Ponty identifies these as inextricably connected. Given this relational state of affairs, a shift from epistemological to ontological concerns is best construed not as an absolute disjunction but as a difference in emphasis within the phrase 'what we can know': a shift of stress from the 'know' to the 'what.'

The complementarity of the Sellarsian and Merleau-Pontian approaches is further reflected in the similar analytical structures that they provide for distinguishing among image frameworks. In a paper explicating Merleau-Ponty's work, Dreyfus and Todes (1962) argue that we can understand him as proposing three distinct experiential 'world'-types characteristic of our distinctively embodied existence. These are: (1) a prepersonal world, (2) a lifeworld, and (3) a scientific world, each of which represents a particular interpretive mode. Despite important differences between Merleau-Ponty and Sellars with regard to the implications of these experience-structuring 'worlds', this framework can be understood as according with Sellars' model of stereoscopic manifest-scientific existence. This is especially the case when we note that Sellars' model is essentially tripartite as well, including what he terms an "original" image alongside the manifest and scientific ones he attends to in most detail.

With this additional apparatus in hand, perhaps we can begin to comprehend the intimate connections advertised at the outset of this paper between various aspects of imaging. The common ground between the Sellarsian original image-manifest image-scientific image conception and the Merleau-Pontian prepersonal world-lifeworld-scientific world conception is, I take it, the following: In each level of the two systems, we can identify a particular modality of human experience encompassing semiotic, epistemological, and ontological concerns. Further, the qualitative character of these modalities is largely the same in both philosophical schemes. Mode I is a space of perceptual possibility constituting a transcendental limit on experience. That is, both Sellars' original image and Merleau-Ponty's prepersonal world are domains where the relationship of the subject to its environment is entirely unclear and waiting to be resolved by experience. This mode sets limits on what can be a sign for us, what we can know, and what we can take the world to consist of; it is an effective bound on what experience we can develop. Nonetheless, the sensory-motor potentialities with which we are endowed in this state can develop into Mode II by a process of categorization. Mode II, the manifest image or lifeworld state, is one in which the status of subjects and objects has been clarified and we exist in a richly phenomenal milieu stabilized by experience. Mode III, the scientific image or scientific world, is one populated not by phenomenal objects but by theoretical entities standing in constrained relation to one another. In other words, the scientific world is one bounded by postulated structures and properties regarded as underlying normal experience. Though more phenomenally sparse than the Mode II world, it provides, precisely because of this sparseness, an instrumental advantage, allowing us to obtain what Merleau-Ponty calls "maximum grasp" on entities for purposes of intervention in our environment.

What do these models add to my discussion of the technical image? The progression from Mode I to Mode II to Mode III traces a focusing process in which we obtain leverage on the world by developing semiotic systems of representation and intervention. Images of various types play fundamental roles in this process. Furthermore, the three sorts of technical images I have identified address these modes differently and thus demonstrate different implications of valuing technical images. The Mode III 'scientific' world is one based on finite algorithmic characterizations of phenomena, especially as formulated quantitatively. The leverage provided by such characterizations is a direct result of this minimalistic and circumscribed framework (Image 3), not only because of its representational effectiveness but also because of its related capacity for enabling action. As Ian Hacking's (1983) account of representing and intervening reminds us, these two aspects of the scientific project are related facets of the same problem−attaining "maximum grasp" is simultaneously a matter of depiction of, and intrusion into, a phenomenal domain.

At first glance, this Mode III role might appear to cover the significance of the technical image as I have described it; if systematic encoding is the salient feature of such images, then the scientific modality, as just described, appears to provide a reasonable framework for their formulation and use. The model provided by Sellars, centered as it is on the mental domain, might accommodate this move. However, as my development up to this point has tried to indicate, the embodied situation detailed by Merleau-Ponty militates against such a tidy characterization. To obtain this kind of theoretical systematicity, we and our images must take part in actual phenomenal processes. In so doing, we encounter obstacles related to the other two, non-scientific, modalities. These help to indicate, in a way that Sellars' own discussion does not, definitive 'manifest' elements of the technical image that must be taken into account.

The Mode II domain of Sellars' 'manifest image' and Merleau-Ponty's 'lifeworld' is the framework within which the processes of visual metaphor actually operate within our embodied minds when interpreting an image. This domain is quite flexible in its capacities for structuring phenomena, especially when using instrumental supplements. We can simulate red-green color blindness using special lenses, and thereby also resystematize the significance of color. We can also superimpose instrumental images (such as infrared 'night vision' or ultraviolet imaging) onto our normal visual mode. Yet this flexibility has limits dictated by the Mode I domain. We cannot, for example, introduce a new color between yellow and orange in the spectrum (infinite gradations between them, yes; but a distinct new intermediate, no). Nor can we introduce a new spatial dimension into our visual field in the way we introduce an orthogonal component into an equation. As such, both Modes I and II are directly relevant to the analysis of even technical images of the kinds I am considering.

In the case of instrumental traces, the constraints placed upon a technical image are artifacts of the device itself. This is the classic sort of technological mediation described in innumerable sources from Heidegger's hammer onward; the device effectively serves as a surrogate subject for us, recording phenomena we might not otherwise observe. In essence, the instrumental image is a product of a metaphor machine, where the depiction makes manifest−introduces into our lifeworld−some phenomenon. In some sense, the metaphorical relations behind the image must be built into the device itself in order for it to function. In other words, instruments of this sort have embedded in them theoretical constructs. We might also say that the scientific instrument relies upon prior Mode III coding to produce phenomena to be interpreted in Mode II.

Maps, or models, differ from instrumental traces in that the process of depiction usually involves no physical instantiation of theory (no machine coded to produce the image) but rather discretionary action on the part of a subject. That is, the map is an artifact of someone working in Mode III to produce phenomena to be interpreted in Mode II. By contrast, the typical painting−unlike the map−is an artifact of someone working in Mode II to produce phenomena to be interpreted in Mode II.

Perhaps the most interesting case−that of the VR simulation−is one that aspires to create a kind of substitute Mode II existence. Like the typical instrument, the VR system again relies on Mode III-type encodings, yet it must also serve the task of creating not just a depiction but an immersive experience as well. In so doing, I think, the VR simulation can run up against constraints not just from Mode III but also from Mode I. To see how this is the case, let us return to the notion of the technical image as one displaying systematic constraint by means of a metaphorical coding of analogies and disanalogies with our Mode II existence.

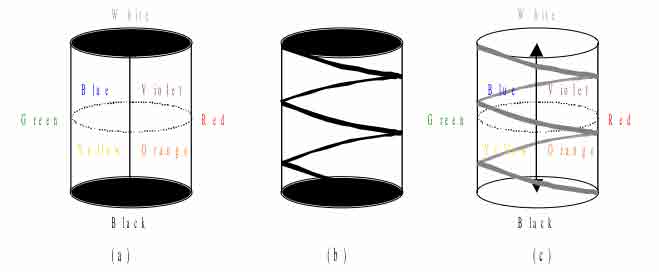

Consider the problem of translating a system of simple auditory tones into a system of simple color tints, or what I will call the 'sight-sound synaesthesia' problem. I will take as given both that perceptual relations as I characterize them require the pre-existence of particular developed mental models and that the relations being mapped only partially capture the phenomena in question. This case thus helps instantiate the reduction process described by Sellars in the shift from manifest to scientific perspective. The translation between sound and color is an incomplete one on both ends (hearing being far more than perception of simple tones and color sight far more than perception of simple tints). We can nonetheless, from a certain scientific standpoint, accurately model each of these phenomena−sound and color−as continua. Thus, a one-to-one mapping of a given range of tonal frequencies onto a given range of hues can easily be achieved. In fact, many different one-to-one mappings of this sort are possible. However, in the manifest view, both of the phenomena in question exist within particular associative structures and are experienced in this more complex relationship. I discussed this issue in detail above regarding the color wheel, or color cylinder, system. A similar model can be formulated for auditory tones, which are (or can be) perceptually related in harmonic cycles−a phenomenon missing from the space of color hues.

Figure 2: Disanalogous Structures of Perceptual Color and Tone: (a) schematic representation of color solid; (b) schematic representation of harmonic tone cycles with vertical distance between cycles indicating octave interval; (c) superposition of color and tone, showing incompleteness of mapping.

Just as we can utilize a cylindrical color structure (Figure 2(a)) to indicate perceptual relations of contrast and resemblance among colors, we can utilize a cylindrical surface to map these tonal relations of sound: By representing the tones as a spiraling sequence around the surface of the cylinder (Figure 2(b)), we can capture the perceptual similarity of tones falling into harmonic-octave relationships as well as delineating a continuum of frequency. We can translate this more structured sequence into colors as well, but now only in a more constrained fashion if we wish to maintain the same content. For example, we might map this spiral onto the surface of the standard color cylinder already discussed (Figure 2(c)). Such a mapping would have the useful feature of indicating all tones in harmonic-octave relationships as similar in tint (i.e., positioned at an identical radial angle on the cylinder) but different in black-white value (vertical location at a given radial angle). This translational scheme thus offers certain advantages over the simple mapping of continuum to continuum, but it also demonstrates a formal incongruity between sight and sound in systematic structure: a mapping of a phenomenon that is comprehensive (all possible tones fall on the spiral) onto one that is not (not all possible pure tints fall on the spiral). In other words, the denotative significance of a sign within one system is not congruent with that of one within the other. It is just this sort of disanalogy that provides a potential manifest limit on translation between disparate phenomena. The scientific 'model' can restrict itself to primary qualities, abstract codes and equations, and the like, but the scientific 'image' qua image, whether physical or mental, cannot. Even for mental images, this limitation appears for reasons described by Merleau-Ponty: Our mental images are embodied ones and depend on Mode I as a possibility space as well as on Mode II as an empirical existential lifeworld.
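The spiral scheme just described can be made concrete in a short sketch. The Python below is a minimal illustration under stated assumptions, not a model of perception: the reference pitch F_REF, the assumed cylinder height N_OCTAVES, and all function names are hypothetical choices made here for illustration. It contrasts a simple continuum-to-continuum map (linear_hue), which gives octave-related tones different hues, with the spiral map (spiral_point), which gives them a shared hue and separates them by value. The inverse function (tone_for_color) exhibits the incongruity discussed above: every tone lands on the spiral, but most (hue, value) points on the cylinder have no tonal counterpart.

```python
import math

# Hypothetical parameters for illustration only (not taken from the text).
F_REF = 27.5      # reference pitch in Hz, placed at the bottom of the cylinder
N_OCTAVES = 8     # number of octaves the cylinder's height is assumed to span


def linear_hue(freq):
    """Continuum-to-continuum map: spread log-frequency across one sweep of hue."""
    f_max = F_REF * 2 ** N_OCTAVES
    t = math.log2(freq / F_REF) / math.log2(f_max / F_REF)
    return 360.0 * t  # octave-related tones end up at different hues


def spiral_point(freq):
    """Spiral map: hue encodes pitch class, black-white value encodes height."""
    octaves = math.log2(freq / F_REF)   # continuous distance above F_REF, in octaves
    hue = 360.0 * (octaves % 1.0)       # same pitch class -> same radial angle
    value = octaves / N_OCTAVES         # value rises steadily along the spiral
    return hue, value


def hue_distance(a, b):
    """Shortest angular distance between two hues, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def tone_for_color(hue, value, tol=1e-6):
    """Inverse map: the tone at (hue, value), or None if the point is off the spiral."""
    octaves = value * N_OCTAVES
    if hue_distance(360.0 * (octaves % 1.0), hue) > tol:
        return None                     # a perfectly good color with no tonal counterpart
    return F_REF * 2 ** octaves


if __name__ == "__main__":
    for f in (220.0, 440.0):            # A3 and A4, one octave apart
        print(f, round(linear_hue(f), 1), spiral_point(f))
    print(tone_for_color(90.0, 0.375))  # off the spiral -> None
```

Under these assumptions, A3 and A4 share hue 0.0 on the spiral while differing in value (0.375 versus 0.5), whereas the linear map places them at 135 and 180 degrees. The choice of a logarithmic frequency axis reflects the octave's status as a doubling of frequency; any other spiral pitch would serve the argument equally well.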

We interact with the technical image, as with any image, in a stereoscopic way, 'seeing double' through both scientific and manifest lenses. Scientifically, the image conveys a complex of data, as when a map allows us to 'see' the state of Germany by means of icons or codes that indicate elements of that technical construct, the German state. In a manifest mode, we also 'see' on the same map aspects of the commonsense image of Germany the location: its qualities of terrain, size, climate, etc. The two image modes are anything but exclusive, yet their practical implications for technical image interpretation are quite distinct. Through systematic encodings, a technical image provides an information medium that should, in a scientific modality, be entirely transparent: Within the corresponding coding framework, the technical image promises to offer unambiguous content with machine-readable objectivity. However, this systematic theoretical role necessarily depends on embodied instantiations. As a result, the denser the technical coding, the more phenomenally rich the embodied image must correspondingly be. Furthermore, to convey anything to us, this image must have a structure we can apprehend, but the phenomena it depicts need not be structured in this way.

Some Current Trends in the Analysis of Nanoimaging

With this perspective established, I will now consider the consequences of my notion of a technical image for several sorts of technical representations of nanospace−the domain of atomic-level phenomena now being explored in contemporary materials engineering. First, I will briefly suggest a symmetry between methods of modeling the entities of the nanoworld and those of our normal experience, insofar as their common object is to establish relevant physical regularities (and, correspondingly, operative constraints on image content). I will also examine some conclusions by two leading investigators of imaging in contemporary technology, Don Ihde and Felice Frankel, to see how they mesh with my own perspective.

So far, I have given only a brief sketch in principle of how the technical image fits into a pragmatic phenomenology of imaging technologies. Now, I will turn to the question of the relationship between imaging phenomena at the atomic scale and imaging on the more familiar 'meso' scale available to bodily perception. My approach here is indebted to the path described by Don Ihde's phenomenological program of instrumental realism, and I find many points of commonality with this work. Ihde's conception of technologies as mediating and extending our manifest world (Ihde, 1991), his criticism of visualism as a basis for rich phenomenal experience (Ihde, 1999), and the non-reductive ontology that characterizes his approach (Ihde, 2001) all resonate fully with my framework. Nonetheless, I am concerned that some of Ihde's recent work might be interpreted as suggesting a kind of symmetry among all scales of technical images.

Specifically, Ihde has recently stated his opinion that virtual reality simulations of the atomic or molecular scale can be regarded as fundamentally equivalent to virtual reality simulations of, for example, piloting an aircraft or viewing our galaxy from above (Ihde, 2003). However, the equivalence Ihde has in mind here is of a different category than the distinctions I am concerned with. Ihde's intention is to indicate that these various simulated spaces are all at present similarly inadequate as phenomenally immersive experiences. These comments are motivated by his commitment to an ontology encompassing an array of sensory modalities beyond the visual, to which I am sympathetic. However, I propose that this orientation has led Ihde to ignore certain details of embodied imagistic simulation that appear even within the visual domain itself. My insistence on difference has instead to do with the process of encoding involved in the creation of a technical image: A technical image is one that creates a functional coding of phenomena by means of visual (and perhaps other) metaphors. In the case of a virtual reality simulation, this encoding attempts to create an immersive environment. In order to refer to functional (or "instrumentally real") phenomena, the simulation must be technical in character. To be immersive, it must also be a multi-sensory image (not just a visual one), and it must create a phenomenologically convincing experience−the formation of a lifeworld.

Now, phenomena at the nanoscale are obviously observable (perceptually accessible) only in a metaphorical sense. Furthermore, the phenomena relevant at the nanoscale are fundamentally unlike those we perceive at the mesoscale. For example, nanoscale entities (as they are theoretically understood) are not fixed in space or time in the way we normally understand objects, nor, as a consequence, do they exhibit familiar characteristics such as solidity or texture. Quantum mechanical and quantum electrodynamic properties in particular are uncanny to us in our normal reference frame. Even such phenomena as electrostatic interactions are difficult for us to interpret by means of unaugmented sensory perception. Conversely, at the atomic scale, such familiar phenomena as light and sound have vastly different implications. Thus, a technical image of the nanoscale, constituted through the phenomenal variables of our mesoworld, will incorporate the same kinds of structural disanalogies we observed in the synaesthesia problem in the previous section. The incongruence that matters in this regard is between the nanoworld itself, as object or 'target' of imaging, and the Mode I mesoworld space of human perceptual possibility out of which both the manifest and scientific viewpoints emerge as dual 'sources' of image content. The more comprehensively we attempt to map between the two domains, the more metaphorical leaps of this sort will appear. Given this situation, the achievement of rich nanoscale virtual reality appears to be a tall order.

It thus seems to me that we have several options: (1) Perhaps we are simply stuck with a phenomenological limit on our access to the nanoworld. In this case, the bulk of disanalogies between the nano and meso scales would simply be too great to bridge, and no compelling virtual experience of atoms would be possible. (2) Perhaps the best we can do is combine thin functional access to the nanoscale with a rich phenomenal fantasy. This, I think, is what happens in Ihde's equation of the flight simulator with the molecular simulator: the two may be equally convincing as experiences, but they are disanalogous in that one closely models an actual experience while the other models something that does not exist. What we effectively have here is a model of nanospace, but not a virtual reality. (3) Perhaps rich phenomenal access to the nanoworld is possible, but if so it will involve disanalogies with normal experience powerful enough to create substantial incommensurability between the domains. In substituting a phenomenal coding capable of functionally representing nanoscale entities for our normal perceptual coding schemes, we would need to step out of one world and into another. This third option is the one I find most satisfying, although I admit the possibility that the first is correct. What I want to argue against (option 2) is the presentation of illusion as the opening of a new domain of phenomenal access.

Ihde's claim of equivalence across scales for virtual reality is, in effect, a tacit criticism of the phenomenal thinness of contemporary practices. For him, the practical equivalence of simplistic single-channel visual simulations of very large, mid-sized, and very small phenomena indicates equivalent prospects in each putative virtual reality domain: in each case, more comprehensive modeling could capture relevant details better. But if the view I am forwarding is correct, this is the point where the equivalences end, as the scale regimes impose particular constraints on imagistic translation. On the issue of atomic-level virtual reality simulations, I thus emphasize−as a supplement to Ihde's perspective−an asymmetry between the nanoscale and the meso and macro scales. We are either stuck with a phenomenally thin picture of the nanoscale or we must admit a structural asymmetry introduced by our partial perspective as embodied human investigators (for more on the notion of partial perspective, see Haraway (1988)).

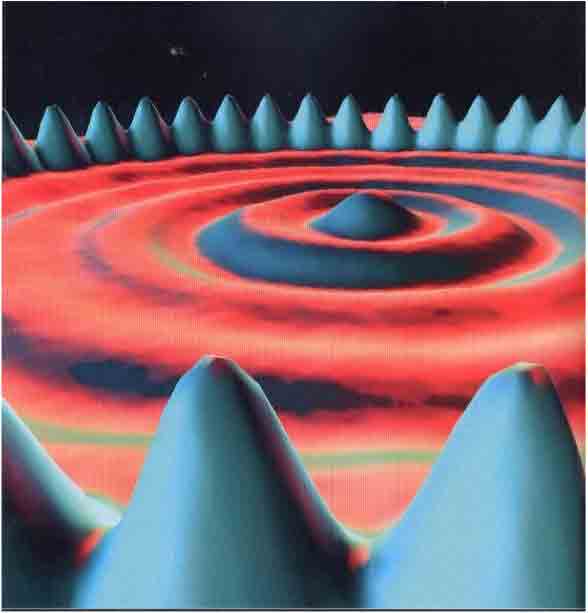

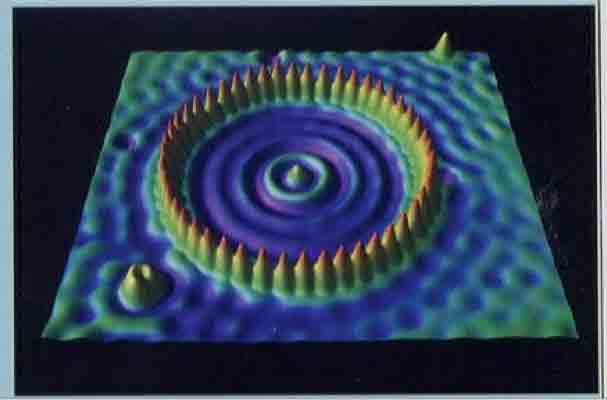

My criticism of Felice Frankel's promotion of a visual culture of science is similarly directed. In a recent contribution to American Scientist, the magazine of the Sigma Xi Scientific Research Society, Frankel interviews two prominent players in atomic-scale imaging−Don Eigler and Dominique Brodbent−and presents their depictions of a so-called 'quantum corral' as "icons" of current scientific practice. These images, which graced the covers of Science and Physics Today, are enhancements of an instrumental trace demonstrating the researchers' capacity to arrange iron atoms in a circle on a copper surface to create a contained pattern of electronic resonance inside. My analysis will proceed by a comparison of various views of the same quantum corral, arguing that the technical content of these images is effectively identical despite their differences in appearance. This will in turn provide an opportunity to consider how contemporary practices of nanoscale imaging approach the problem of providing both scientific and manifest access to their target domain. The images in question are shown in Figures 3, 4, and 5 below. The first of these is a gray-scale image in two parts, showing a rearrangement of atoms over time, from an initial disordered state in Figure 3(a) to an ordered one in 3(b). As a brief aside, we may note that this pairing emphasizes the connection between representing and intervening at this scale advertised in my earlier discussion of Hacking: the image pair shown in this figure not only reflects our ability to intervene among these atoms, but is itself a constitutive element of that intervention. However, the main distinctions I wish to emphasize are between the image in Figure 3(b) and those in Figures 4 and 5. These all represent the same physical configuration of atoms, but the latter two introduce elements of three-dimensional perspective and color that are missing from the black and white overhead view on which they are based.

Figure 3: The 'gray corral' image showing the quantum barrier during and after construction, using gray-scale shading. From Frankel (2005).

Figure 4: The 'orange corral' image created by Don Eigler for the cover of Science magazine, as reproduced in Frankel (2005).

Figure 5: The 'blue corral' image created by Dominique Brodbent for the cover of Physics Today, as reproduced in Frankel (2005).

Eigler and Brodbent's products are compelling images, but for largely non-technical reasons. In fact, both researchers describe to Frankel quite vividly the discretionary choices they made to accentuate the phenomenal impact of the data they have imaged. Here are some typical quotes from Frankel's article, with the interviewee identified:

Don Eigler: "I wanted to create an image with as dramatic a perspective as possible... I chose the colors, "lighting conditions" and point of view of the observer to suit the purpose of the moment."

Don Eigler: "I began to apply paint. It was a matter of...searching for the combination of perspective, lighting, surface properties and color that communicated what I wanted to communicate."

Don Eigler: "I wanted others to share in my sense of being an intimate observer of the atoms and the quantum states of the corral."

Dominique Brodbent: "For the blue corral...the separation effect was achieved by coloring the surface by height, using a color map that assigns a single color to a particular height above ground. There are far fewer degrees of freedom to handle compared to the technique described above." (All quotes, Frankel (2005))

Clearly, too, Eigler and Brodbent are describing distinct practices of image enhancement. Eigler's strategy appears, in my terms, entirely non-technical (see Figure 4). No trace of systematicity is evident in his process; rather, he characterizes the image formation as a 'search.' His language−of choices, personal communicative desires, and the achievement of intimate acquaintance with the subject−is distinctively geared towards a manifest, or Mode II, conception of the image. Furthermore, this conception is itself a fairly thin one; what Eigler describes as the experience of 'being an intimate observer' is precisely the sort of single-channel visual illusion discussed earlier in relation to Ihde's position on virtual reality images. As I will elaborate in my conclusion, this minimalistic conception of observation−what we might call the 'Maxwell's demon' perspective−is more appropriate to a Mode III scientific interpretation than to the purposes of phenomenal acquaintance that Eigler advertises.

Brodbent's approach, by contrast, appears to fit better with my conception of a technical image. However, we should note that the encoding of the blue corral described by Brodbent superimposes a color scheme redundantly on an already existing black-and-white topographic representation of height (see Figure 5). While this superposition of color onto the 'terrain' may assist the eye in directional orientation, its primary effect in this image is to accentuate rather than to add information. As such, I contend, both Eigler's and Brodbent's enhancements leave the technical content of the image unchanged relative to the sparser Figure 3(b). Here too, as with the nanoscale 'virtual reality' situation described above, we are in danger of substituting a compelling illusion for a richer depiction of the domain being imaged. And, of course, this is perfectly understandable once we recognize that the purpose of Eigler's and Brodbent's corrals is to sell their research rather than to put us in a position to experience the nanoscale as such.

Still, we should not confuse rich access to the nanoscale with vivid representation of the nanoscale. Frankel's observations ignore the positive role of technical constraints in favor of an 'aesthetic' approach to image manipulation that does little justice to either the scientific or the manifest potential of technical images. Frankel praises the manipulation of nanoscale images by workers like Eigler and Brodbent because she sees in it parallels with longstanding practices of artistic depiction. On this basis, she argues for a strengthened visual culture in science that will make the objects of the nanoworld more vivid to us. But is a strengthened visual culture only a matter of vivid representation? No. It is also a matter of technological access. My objection rests on the premise that such approaches, which doubtless do enhance our experience of the images themselves, do little or nothing to enhance our experience of nanoscale phenomena, either from an informational or a phenomenal standpoint. Images of the type Frankel discusses typically use false color, shading, and filters to highlight features already on display rather than to introduce new information. The result, I contend, is a characteristic 'thinness' of nanoimages, both informationally and phenomenally. By contrast, closer attention to the technical features of nanoimages would push visual culture toward richer and more functional mappings of nanospace.

Conclusion

We ask our images to provide certain things for us. Problems arise when we mistake what they have provided for something else: for example, when we mistake the 'familiarity' of an image for its 'usefulness.' The technical image, as I have defined it, lends itself to certain tasks. In particular, as I have tried to demonstrate, it is conducive to a dense transfer of information that is also phenomenally rich. Accordingly, strategies for improving technical images must attend not only to the theoretical systematicity of encoding but also to the problem of embodied translation between incommensurate manifest domains such as the atomic scale and the human scale. What advocates of nanoscale virtual reality demand, and what Eigler seeks in his search for observational intimacy, is not a disembodied and impotent seat among atomic phenomena but an experiential engagement with them. In other words, virtual nanospace reality asks us to be in a situation like that of the entities of that regime. What distinguishes the situation of an atom in nanospace from that of a Maxwell's demon in nanospace is the atom's embodied engagement. Transferring our human embodiment down to the level of atoms thus means much more than the capacity to render that domain visually familiar.

Frankel's strategy, for example, succeeds only in a subdomain of the Mode II world: it lends the image vivid aesthetics, a (largely false) feeling of familiarity and acquaintance, without providing an actually rich experience of the relevant phenomena. This, I maintain, is an impoverished conception of what it means to participate in a manifest world. Even this pursuit of compelling illusions, though, reflects an important instinct in contemporary scientific work. Researchers at the nanoscale are clearly conscious of the value of Mode II 'lifeworld' engagement with their subject matter, especially when it comes to conveying their results to the lay public. What they appear not to have recognized, though, are the full technical implications of this desideratum in relation to 'scientific' ones. Engagement with the manifest, phenomenal world is not merely a matter of vividness ("That's a striking red") or valence ("I love that shade of red"), but also of contextual interpretation: the experiential relationships among phenomena, such as the color contrasts or sonic harmonies (resemblances) in my synaesthesia example. The significance of an image emerges within a perceptual structure of such relationships, and these relationships, too, are elements of our embodied Mode II worldview.

My argument, then, has essentially been about what we mean, and what we might mean, when we claim that we can now 'see' atoms or 'gain access to' the nanoscale. I want to hold claims of this sort to a fairly high standard. By deploying the notion of a technical image, I intend to indicate a pathway toward a richer use of phenomenological perspectives in technoscientific work. By focusing on the specific constraints required to encode functional properties of phenomena in images, I hope, like Frankel, to encourage the development of a stronger tradition of imaging. I also believe, like Ihde, that such a tradition, if it is to give us rich manifest experience of a lifeworld, must transcend the limits of visual phenomena in favor of a more comprehensive semiotic positioning of the observer in a perceptual space. My criticisms have been directed at a seeming credulity about what a richer phenomenal experience consists of. On the one hand, Frankel appears to license the introduction of convincing but fantastic elements into images purporting to represent a novel space. On the other, Ihde's comprehensive skepticism about the state of the art in virtual reality may obscure important differences between imaging at different scales. By contrast, I want to advocate a strict standard, by analogy with cartography, for representation and intervention at the atomic scale, not merely as a corrective to nanohype but also to encourage the production of images that better convey the pragmatic possibilities for incorporating aspects of the nanoworld into our perceptual frame. I also want to insist on ineliminable asymmetries between the nanoscale and our normal scale of perceptual experience. These asymmetries can be understood as variations in the visually metaphorical character of representations of the two regimes.

I employ the notion of a 'technical image' to argue for an interpretation of instrumental imagery that relies less on concerns about truth or reality than on concerns about efficacy. Accompanying this perspective is a set of normative considerations that constrain what a proper technical image should be. These include an understanding of images as semiotic systems capable of conveying claims, attention to interpretive flexibility and its limits in actual images, norms of minimalism and coherence associated with such images, and a contraposed norm that militates for maximal coupling between imaging codes and our perceptual capacities. In these terms, we can view the problem of imaging nanospace as turning on the levels of constraint in visual codes, the levels of phenomenal detail involved in such coding, and related issues. In short, I hope to replace discussions of realism with discussions of efficacy, or of the functionality of structure and properties, in the domain of images.

References

Baird, D. 2004. Thing Knowledge: A Philosophy of Scientific Instruments. University of California Press.

Carroll, N. 1994. "Visual Metaphor," In Aspects of Metaphor, J. Hintikka (ed.). Kluwer Academic Publishers, 189-218.

Daston, L., and P. Galison. 2007. Objectivity. Zone Books.

Dreyfus, H. L., and S.J. Todes. 1962. "The Three Worlds of Merleau-Ponty," Philosophy and Phenomenological Research 22(4): 559-565.

Frankel, F. 2005. "Sightings," American Scientist 93(3, May-June).

Goodman, N. 1976. Languages of Art: An approach to a theory of symbols. Hackett Publishing.

Hacking, I. 1983. Representing and Intervening: Introductory topics in the philosophy of natural science. Cambridge University Press.

Haraway, D. 1988. "Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective," Feminist Studies 14(3): 575-599.

Heelan, P. 1983. Space-Perception and the Philosophy of Science. University of California Press.

Ihde, D. 1991. Instrumental Realism: The interface between philosophy of science and philosophy of technology. Indiana University Press.

Ihde, D. 1999. Expanding Hermeneutics: Visualism in Science. Northwestern University Press.

Ihde, D. 2001. Bodies in Technology (Electronic Mediations). University of Minnesota Press.

Ihde, D. 2003. "Simulation and Embodiment," 4S Conference (Atlanta, GA).

Lakoff, G., and M. Johnson. 1980. Metaphors We Live By. University of Chicago Press.

Lakoff, G., and M. Johnson. 1999. Philosophy in the Flesh: The Embodied Mind and Its Challenge to Western Thought. Basic Books.

Merleau-Ponty, M. 1945/1962. Phenomenology of Perception, translated by C. Smith. Routledge and Kegan Paul.

Pitt, J.C. 2004. "The Epistemology of the Very Small," In Discovering the Nanoscale, D. Baird, A. Nordmann, and J. Schummer (eds.). IOS Press, 157-163.

St. Clair, R.N. 2000. "Visual Metaphor, Cultural Knowledge, and the New Rhetoric," In Learn in Beauty: Indigenous Education for a New Century, J. Reyhner, J. Martin, L. Lockard, and W. S. Gilbert (eds.), 85-101.

Seitz, J.A. 1998. "Nonverbal Metaphor: A Review of Theories and Evidence," Genetic, Social, and General Psychology Monographs 124(1): 95-119.

Sellars, W. 1963. "Philosophy and the Scientific Image of Man," In Science, Perception and Reality. Humanities Press.

Acknowledgments

Figure 1: Courtesy of Bethesda Softworks.

Figure 2: Courtesy of Tim Davidson and Ted Lai.