JITE v43n2 - Testing Equals Relevance in Technology Education

Testing Equals Relevance in Technology Education

Steve Rogers

Walker Career Center

The current climate in education suggests that two items are sovereign in schools: assessment and accountability. The No Child Left Behind Act of 2001 (NCLB) required states to establish systems of assessment and accountability (NCLB, 2001). Ken Starkman (2006), president of the International Technology Education Association, contends, “Most educators see accountability as queen and testing as king of this legislation” (p. 28). Now that every state has an assessment and accountability system, we must ask ourselves where technology education fits into these systems. As a profession, we need to acknowledge that in education today, testing equals relevance. Therefore, to be recognized as a mainstream, significant field, we should push for state standardized tests in technology education.

Assessment and Accountability Background

According to Linn (2000), assessment and accountability have played prominent roles in many of the education reform efforts of the past 50 years. In the 1950s, testing was used to select students for higher education and to identify students for gifted programs. By the mid-1960s, test results served as one measure of the effectiveness of Title I and other federal programs. In the 1970s and early 1980s, the minimum competency testing movement spread rapidly, and 34 states instituted some form of basic-skills testing as a graduation requirement. The late 1980s and early 1990s saw the continued and expanded use of standardized test results for accountability purposes.

With the passage of NCLB in 2001, schools are now held accountable for student achievement and must show that their students make adequate yearly progress (AYP). Schools that fail to make AYP face a series of consequences.

Currently, most states measure AYP through standardized tests. Such tests appeal to policy makers for several reasons: they are relatively inexpensive compared with program changes, they can be externally mandated, they can be implemented rapidly, and they produce visible results (Linn, 2000).

Accountability refers to the premise that schools are responsible for the learning and academic achievement of all their students. Accountability is documented in a variety of ways, including summative and formative measures, standardized tests, and sometimes performance-based assessments of student learning. It is not simply about reporting results; it also attaches positive and negative consequences to those results.

The current educational discussion about accountability emphasizes three underlying principles: (a) content standards serve as the basis of assessment and accountability, (b) performance standards are used to evaluate student learning, and (c) high-stakes consequences for students, teachers, and schools are tied to accountability measures (Linn, 2000).

Standardized Tests

Standardized tests fall into two major types: norm-referenced tests and criterion-referenced tests. The two types differ in their intended purpose, in how their content is selected, and in their scoring process, which determines how the results must be interpreted.

The major reason for using a norm-referenced test is to classify students. Norm-referenced tests are designed to highlight achievement differences between and among students in order to produce a dependable rank order of students across a continuum of achievement, from high achievers to low achievers. School systems might classify students in this way to place them in appropriate remedial or gifted programs. Teachers also use these tests to help select students for different ability-level reading or mathematics instructional groups (Bond, 1996).

While norm-referenced tests establish the rank of students, criterion-referenced tests determine “...what test takers can do and what they know, not how they compare to others” (Anastasi, 1988, p. 102). Criterion-referenced tests report how well students perform relative to a predetermined performance level on a specified set of educational goals or outcomes in the school, district, or state curriculum.

Test content is an important distinction between norm-referenced and criterion-referenced tests. The content of a norm-referenced test is selected according to how well it ranks students from high achievers to low achievers. The content of a criterion-referenced test is selected according to how well it matches the learning outcomes deemed most important. Although no test can measure everything of importance, the content of a criterion-referenced test is chosen for its significance in the curriculum, while the content of a norm-referenced test is chosen for how well it discriminates among students (Bond, 1996).
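To make the scoring distinction concrete, the following minimal Python sketch scores the same small group of students both ways. It is an illustration under stated assumptions, not the procedure any state uses. The student names, scores, and the "proficient" cut score of 70 are hypothetical. Percentile rank is computed here simply as the percentage of the group scoring below a student, whereas operational norm-referenced tests compare students against large national norm samples using more elaborate scaling.

```python
from bisect import bisect_left


def percentile_rank(score, norm_group):
    """Norm-referenced view: percentage of the norm group scoring below `score`."""
    ranked = sorted(norm_group)
    below = bisect_left(ranked, score)  # count of scores strictly less than `score`
    return 100.0 * below / len(ranked)


def meets_standard(score, cut_score):
    """Criterion-referenced view: does the score reach a fixed, pre-set performance level?"""
    return score >= cut_score


# Hypothetical raw scores (percent of items correct) for one small group.
scores = {"Ana": 92, "Ben": 74, "Cal": 71, "Dee": 58}
CUT_SCORE = 70  # hypothetical "proficient" cut score chosen for illustration

for name, s in scores.items():
    pr = percentile_rank(s, list(scores.values()))
    print(f"{name}: percentile rank {pr:.0f}, proficient: {meets_standard(s, CUT_SCORE)}")
```

In this toy group, Cal ranks at only the 25th percentile yet still meets the standard. A norm-referenced report would emphasize that low ranking, while a criterion-referenced report would emphasize that Cal has mastered the required content.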

Current State Assessments in Technology Education

Based on a survey of the education department websites of all fifty states and the District of Columbia, only two states, Massachusetts and New York, directly assess technology education. Kentucky assesses technology education only indirectly, by testing practical living and vocational skills.

The assessment of technology education in Massachusetts began with the 2001 Massachusetts Science and Technology/Engineering Curriculum Framework. For the first time, the 2001 framework articulated standards for full-year high school courses in technology/engineering. The framework identified a subset of core standards for each course that were designed to serve as the basis for the Massachusetts Comprehensive Assessment System (MCAS) (Massachusetts Board of Education, 2006).

The MCAS test is a criterion-referenced test that covers four major content areas: English/language arts; mathematics; science and technology/engineering; and history and social science (Massachusetts Board of Education, 1998). The technology/engineering area is tested in grades 4, 8, and 10. At each level, the technology/engineering questions focus on the design process and on understanding and using technology. Key questions include: How does this work? How can this be done? How can this be done better? Figure 1 provides a sample MCAS test question.

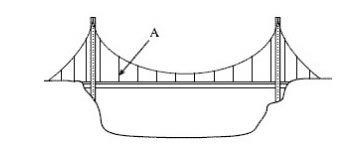

FIGURE 1

The figure below shows a pictorial model of a highway bridge.

What is the primary structural action of member A?

A. compression

B. shear

C. tension

D. torsion

(Massachusetts Board of Education, 2005, p. 3)

New York also tests technology education directly, but only at the intermediate (middle school) level, through program evaluation tests. New York school districts identified the essential knowledge covered in the state’s technology education classes, and the assessment is designed to help districts identify the strengths and weaknesses of their overall programs. With this purpose in mind, individual student scores are evaluated to discover whether the essential knowledge identified by the districts has been taught successfully.

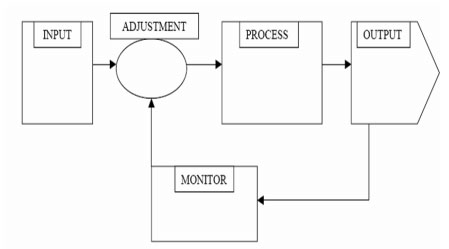

FIGURE 2

16. The systems model is used to explain how systems work. Select one system type from the list below and use the systems model to explain it.

System type _____________________________________

- Home Heating system

- Automobile cooling

- Residential electrical system

- Hydroponics growing system

Write in the spaces provided, the specific parts of the system you chose from the list above

(New York State Department of Education, 2000b, p. 5)

The New York Intermediate Assessment in Technology covers the following areas: engineering design; tools, resources, and technological processes; computer technology; technological systems; history and evolution of technology; impacts of technology; and management of technology (New York State Department of Education, 2000a). These areas are tested using multiple-choice and short-answer questions. Figure 2 shows a sample question from the New York Intermediate Assessment in Technology (New York State Department of Education, 2000b).

Kentucky’s testing system, the Commonwealth Accountability and Testing System, tests students in seven core content areas: reading, mathematics, science, social studies, arts and humanities, practical living/vocational studies, and writing. These criterion-referenced tests are administered at various grade levels. The practical living/vocational studies area is tested in grades 5, 8, and 10. Its topics include jobs/careers, selecting and preparing for a career, work habits, skills for success, and postsecondary opportunities (Kentucky Department of Education, 2004).

States that do not directly test technology education nevertheless seem to assume some level of technological literacy in their students. For example, the Delaware Student Testing Program is designed to (a) serve as a measure of progress toward the Delaware content standards and (b) ensure that students can apply their academic skills to realistic, everyday problems (Delaware Department of Education, 2004).

These annual Delaware tests evaluate reading, writing, and mathematics in grades 2-10, with additional science and social studies tests administered in grades 8 and 11. Although Delaware tests five content areas, it does not specifically test technology education. Nevertheless, its second stated goal, ensuring that students can solve everyday, real-world problems, seems to imply an emphasis on technological literacy.

Conclusion

The International Technology Education Association’s (ITEA) Standards for Technological Literacy defines technology as “how humans modify the world around them to meet their needs and wants, or to solve practical problems” (ITEA, 2000). As technology educators, we strive to help our students master the knowledge and ability to adapt and modify their world. Assessing a student’s grasp of this ability is difficult, but it is not impossible.

According to Benenson and Piggott (2002), “the proliferation of testing is difficult to resist, and more and more classroom time is devoted to teaching to the test” (p. 52). Like it or not, this is the environment in which we currently teach, and we must become part of it or be left behind. Starkman (2006) advocates assessment. He maintains, “There is no question that accountability and testing are here to stay…” (p. 28). Instead of resisting state assessments, we must embrace them.

Other states should follow the lead of Massachusetts and implement statewide assessment tests in technology education. These tests should be criterion-referenced. However, they should not be tied to any high-stakes testing program, nor should they be used as graduation requirements. States should base the tests on both the Standards for Technological Literacy and their current state standards for technology education.

As a profession, we have choices to make. We can accept the status quo, or we can change. Now is the time to advocate for change and embrace the current trend of standardized testing by insisting that our states add a criterion-referenced test in technology education. Such exams would show students, parents, teachers, and administrators what we already know: that technology education is relevant and accountable in today’s educational climate.

References

Anastasi, A. (1988). Psychological testing. New York, NY: Macmillan.

Benenson, G., & Piggott, F. (2002). Introducing technology as a school subject: A collaborative design challenge for educators. Journal of Industrial Teacher Education, 39(3), 48-64.

Bond, L. A. (1996). Norm- and criterion-referenced testing. Washington, DC: ERIC Clearinghouse on Assessment and Evaluation.

Delaware Department of Education. (2004). Delaware Student Testing Program: A score results guide for educators. Dover, DE: Author. Retrieved March 7, 2006, from http://www.doe.state.de.us/aab

International Technology Education Association. (2000). Standards for technological literacy: Content for the study of technology . Reston, VA: Author.

Kentucky Department of Education. (2004). Kentucky core content tests: Based on the analysis of data from the 2001-2002 school year. Frankfort, KY: Author. Retrieved March 6, 2006, from http://www.education.ky.gov/KDE

Linn, R. (2000). Assessments and accountability. Educational Researcher, 29(2), 4-16.

Massachusetts Board of Education. (1998). Guide to the Massachusetts Comprehensive Assessment System. Boston, MA: Author. Retrieved February 8, 2006, from http://www.doe.mass.edu/mcas/

Massachusetts Board of Education. (2005). Massachusetts Comprehensive Assessment System spring 2005 release of test items: XV technology/engineering grade 9/10. Boston, MA: Author. Retrieved February 8, 2006, from http://www.doe.mass.edu/mcas/

Massachusetts Board of Education. (2006). Massachusetts science and technology/engineering high school standards. Boston, MA: Author. Retrieved February 8, 2006, from http://www.doe.mass.edu/mcas/

New York State Department of Education. (2000a). Guide to the intermediate assessment in technology. Albany, NY: Author. Retrieved February 8, 2006, from http://www.emsc.nysed.gov/osa/tech/home.shtml

New York State Department of Education. (2000b). Guide to the intermediate assessment in technology: Sample questions. Albany, NY: Author. Retrieved February 8, 2006, from http://www.emsc.nysed.gov/osa/tech/home.shtml

No Child Left Behind Act of 2001, Pub. L. No. 107-110, 107th Cong. (2001).

Starkman, K. (2006). President’s message: Forward. The Technology Teacher, 65(6), 28-30.

_______________

Rogers is a Project Lead The Way teacher in technology education at the Walker Career Center in Indianapolis, Indiana, and a graduate student at Purdue University in West Lafayette, Indiana. He can be reached at srogers@warren.k12.in.us.